Creating an account has many benefits:

- See order and shipping status

- Track order history

- Check out faster

SPSS-based ANOVA

Summary

Currently, there are four main methods used for ANOVA in SPSS: one-way ANOVA based on the "one-way ANOVA" procedure, one-way ANOVA based on the "univariate" procedure, two-way ANOVA without repeated observations based on SPSS software, and ANOVA with repeated data such as cross-grouping based on SPSS software.

Principle

The basic principle of one-way ANOVA using SPSS software is that when k (k ≥ 3) overall averages need to be compared, 1/2k (k-1) differences will be generated, and if these differences are to be tested one by one, the probability of committing a type Ⅰ error will be greatly increased with the increase of k, leading to an increase in experimental error and a decrease in the precision of the estimation. Therefore, it is not possible to apply t-test or u-test directly for hypothesis testing between two means. For this reason, statisticians have proposed a method to test for the presence of significant influences in a k ≥ 3 system, which is essentially a quantitative analysis of the causes of variation in the observations and is called an analysis of variance (ANOVA).

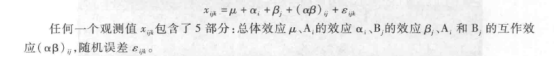

(1) Linear model and basic assumptions

Assume that a single-factor experiment has k treatments, each treatment has n repetitions, and there are a total of kn observations. The data structure of this kind of experimental data is shown in Table 5-1.

(2) Sum of Squares and Degrees of Freedom Profiles

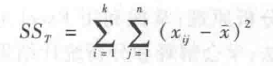

In Table 5-1, the total variation of all observations is the sum of the squared deviations of the observations x from the total mean x, denoted as SSr, which is given as:

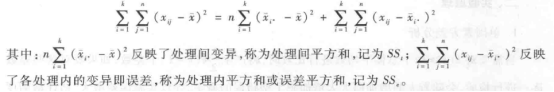

The decomposition is obtained:

② Segmentation of Total Degrees of Freedom In calculating the total sum of squares, each observation in the data is subject to the condition that "the sum of the outlying differences is 0", so the total degrees of freedom is equal to the total number of observations in the data minus 1, i.e., the total degree of freedom, dfT = kn -1. The total degrees of freedom can be segmented into two parts: inter-treatment degrees of freedom, dft = k -1, and intra-treatment degrees of freedom, dfe = kn - k = k(n - 1). The sum of the squares of the components divided by their respective degrees of freedom yields the total mean square, the inter-treatment mean square and the intra-treatment mean square, denoted as MST, MSt and MSe, respectively.

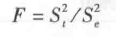

(3) F-distribution and F-test

In a normal population N (μ, σ2), k samples with sample content n are randomly selected, and the observed values of the samples are organized into the form of Table 5-1. Thus, both  and

and

F has two degrees of freedom: df1 = dft = k - 1 and df2 = dfe = k(n-1). If a series of samples from this aggregate is continued for a given k and n, a series of F values are obtained. The probability distribution of these F-values is called the F-distribution. The critical values F0.05 and F0.01 can be found from the table of critical values for F.

The F-test is a method of inferring whether the variances of two aggregates are equal by the magnitude of the probability of the F-value occurring. In a one-way ANOVA, the null hypothesis is H0: μ1 = μ2 = ..... = μk, and the alternative hypothesis is HA: the μi are not all equal. If F < F0.05 ( df1, df2 ), i.e. P > 0.05, accept H0, indicating that the difference between treatments is not significant; if F0.05 ( df1, df2 ) ≤ F < F0.01 ( df1, df2 ), i.e. P ≤ 0.05, negate H0, accept HA, indicating that the difference between treatments is significant; if F ≥ F0.01 ( df1, df2 ), i.e. P ≤ 0.01 , negate H0 and accept HA, indicating that the differences among treatments are highly significant.

(4) Multiple comparisons

① Least significant difference method. The method of least significant difference (LSD) is the simplest method of multiple comparisons, and the steps of multiple comparisons using the LSD method are as follows: list the multiple comparisons of the mean table, and the treatments in the comparison table are arranged top-down according to their means from the largest to the smallest; calculate the least significant difference between LSD0.05 and LSD0.01; and compare the difference between the two means in the multiple comparison of means table with LSD0.05 and LSD0.01. The difference between the two means in the table of multiple comparisons of means is compared with LSD0.05 and LSD0.01, and statistical inferences are made.

The scale formula for multiple comparisons of LSD method is:

② Duncan method The Duncan method considers the difference of the means as the extreme deviation of the means, and uses different test scales according to the number of treatments included in the range of the extreme deviation (known as the ordinal distance), k, in order to overcome the shortcomings of the LSD method. These different test scales depending on the rank distance k at the significant level α are also called the least significant extreme deviation LSR.

The formula is:

The basic principle of two-factor ANOVA is that when the trait under study is affected by two factors at the same time and two factors need to be analyzed at the same time, two-factor ANOVA can be performed. The relatively independent role of each factor is called the main effect of the factor (main effect); a factor in another factor at different levels of the effect of different, there is an interaction between the two factors, referred to as interactions (interaction). Interaction between factors is significant or not related to the utilization value of the main effect, if the interaction is not significant, then the effect of each factor can be added, the optimal level of each factor combined, that is, the optimal combination of treatments; if the interaction is significant, then the effect of each factor can not be directly added, the selection of the optimal treatment should be based on the direct performance of the combination of the treatment selected.

(1) Two-factor ANOVA without repeated observations

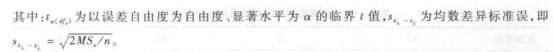

Unduplicated observations means that each treatment is not duplicated, i.e., assuming that there are a level of factor A and b levels of factor B, and there is only one observation for each treatment combination. The data structure of the unduplicated data is shown in Table 5-2.

The linear model for the observations in the two-way ANOVA is:

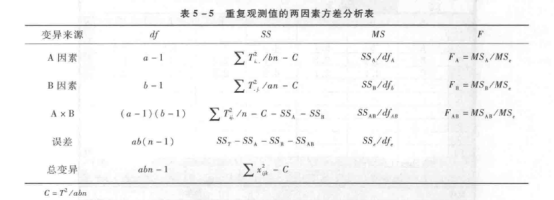

The results of the two-factor ANOVA with no replicated data can be summarized in the form of Table 5-3.

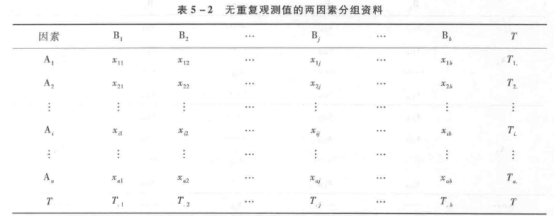

(2) Two-factor ANOVA with repeated observations

In a two-way ANOVA without repeated observations, the estimated error is actually the interaction of the two factors, and this result holds only if there is no interaction between the two factors, or if the interaction is small. However, if there is interaction between the two factors, the experimental design must be designed with repeated observations, which can estimate the interaction as well as the error at the same time. A typical design for a two-factor experiment with equal replicated observations is to design n replications for each combination of different factor levels, assuming that factor A has level a and factor B has level b. The data pattern is shown in Table 5-4.

The linear mathematical model for two-factor information with repeated observations is:

The results of the two-factor ANOVA for repeated observations can be summarized in the form of Table 5-5.

For more product details, please visit Aladdin Scientific website.

- Help & Customer Service

- FAQs

- What do the terms MSDS, SDS, COA, and TDS in the chemical industry mean? How do they differ?

- What is the purpose of an isotype control? How are they selected?

- Why are our chemicals so much less expensive than the same chemicals sold by your competitors?

- What are MSDS and SDS?

- What are the requirements to become an Aladdin distributor?

- Are there any discounts or sales rebates for distributors?

- What is the duration of the distributor agreement?

- Will I receive training and support as a distributor?

- Under which law is the distributor agreement governed?

- The differences between registered users(with a profile) and non registered users

- Delivery and receipt specification

- What is a third -party express or logistics company delivery?

- My order was charged the freight. If the return in the later period, will the previous freight be refunded to me?

- What should I do if the goods are less/the product is wrong?

- What should these products be used for and who should use them?

- Why are chlorophosphonate liposomes useful for studying macrophage function?

- What is the CAS number?

- What dose of antagonist, agonist, or signaling tool should be used in vivo?

- Aladdin product purity and quality: what is it and how is it determined?

- What dose of an antagonist, agonist, or signaling tool should be used in vitro?

- How do I determine if a compound is cell-permeable?

- What should I do if I can't see any product in the vial?

- Should different batches of a product look the same?

- What is the molecular weight and molecular formula?

- How do I dissolve my product?

- How should I store my product?

- Effects of storage on solubility

- Why does Aladdin‘s Biochemicals sometimes ship chemicals at room temperature when the vial is labelled 'store at +4°C or -20°C'?

- What is the half-life of clodronate (liposomes)?

- Pack sizes and weighing accuracy: do I need to reweigh my product?

- I did not observe macrophage depletion, what can be the reason?

- My animal has died immediately after injection of (clodronate) liposomes。

- How to know how much peptide is in my vial?

- My animal has died several hours or days after injection of (clodronate) liposomes.

- How should I store and use lyophilized peptides?

- How should l store the liposomal suspension?

- What is the concentration of the clodronate liposome suspension?

- How should I dissolve and store peptide solution?

- What is the size and charge of the liposomes?

- ln which medium are the liposomes suspended?

- Dissolved amino acids: how to prepare a solution with 1 equivalent of NaOH?

- How can l detect the Dil-labeled liposomes?

- What types of experiments are our products eligible for?

- I need the SDS and Certificate of Analysis for the product that I purchased.

- Where can I get protocols for using your products?

- GHS Classification

- How to obtain a quality inspection certificate (COA)?

- Does aladdin test for endotoxin in their antibodies?

- What should I know about the stability of your protein products?

- Which isotype are Aladdin’s polyclonal antibodies?

- What endotoxin level should be expected when purchasing Aladdin proteins?

- How are your antibodies purified?

- Why can’t I see the protein pellet in the vial?

- Can you tell me what epitope your antibody binds to?

- Which cytokines show cross-species activity?

- Have Aladdin’s antibodies been tested in neutralization assays?

- What is the relationship between the specific activity expressed as an ED50 and as units/mg?

- Are Aladdin’s antibodies suitable for use in ELISA and Western Blot applications?

- What information should be known about the stability of your antibody products?

- Do Aladdin’s antibody products contain any carrier proteins or other additives?

- What is the relationship between specific activity units and International Units of activity?

- How does Aladdin obtain International Units of activity?

- Will Aladdin antibodies work in immunohistochemistry and immunocytochemistry applications?

- Will Aladdin antibodies recognize target proteins sold by other vendors?

- Will Aladdin antibodies recognize target protein in complex biological fluids such as blood or serum?

- What are the differences between encapsulation efficiency, loading capacity, and yield?

- How can micelle stability be improved?

- Can nanoparticles be used for oral drug delivery?

- What is the ideal size for a nanocarrier?

- What strategies can be used for the extended release of peptides for one month or more?

- When considering PEGylation for a protein drug, how do you decide what PEGylation chemistry to use?

- What are Building Blocks?

- What are the applications of Building Blocks in drug development?

- What are the common types of drug Building Blocks?

- What are the substituted functional groups of the Building Blocks?

- What role do Building Blocks play in new drug development?

- Which Building Blocks are more popular in medicinal chemistry?

- What are the main competition barriers of molecular Building Blocks industry?

- What is the application scale of molecular Building Blocks in drug development?

- What are the targets of molecular Building Blocks?

- What are the synthetic techniques of molecular Building Blocks?

- My DMSO-d6 appears to be a solid. What can I do?

- How can I measure acidity levels in D2O solutions?

- Should my NMR solvents be handled in a special way?

- Does my isotope solvent require special storage?

- What are the 3 most commonly purchased isotopes in trace element analysis and their quantity of supply?

- What type of mineral oil is contained in the 6Li metal, and how would this be removed?

- What are the key criteria for selecting stable isotope-labeled standards for clinical measurements?

- What is meant by descriptions such as TC treated/no TC treated that appear in product descriptions such as cell culture dishes?

- Which surface should I use to grow suspension cells?

- How should closed-cap cell culture flasks be used? What is the difference between the use of the lid with the filter membrane?

- What do we often hear about T25 or T175?

- I used i-Quip® surface treated culture bottle/dish/plate, the cells do not adhere to the surface, is it a product quality problem?

- After removal from the liquid nitrogen tank, the cell cryogenic vials will occasionally burst. How to avoid it?

- What precautions should be taken when using cell cryogenic vials?

- What are the possible reasons for the cracking phenomenon of the centrifuge tube during centrifugation?

- Why is it necessary to add carrier protein to some recombinant protein solutions?

- Will glycosylation affect the biological activity of recombinant protein?

- How to operate the reconstitution and preservation of recombinant protein?

- How can I save the recombinant protein after re-dissolution?

- What are the main differences between the recombinant proteins produced by different expression systems?

- Why didn't I detect the activity of the recombinant protein in the experiment?

- Can recombinant proteins of different species be cross-used?

- What is the role of the protein tag? Does the fusion tag need to be removed during the experiment?

- Is it possible to use the vortex shaker to help the lyophilized powder fully dissolve?

- What is Friedel Craft reaction with example?

- What are the advantages of Friedel Crafts acylation?

- Is Friedel-Crafts alkylation reversible?

- How is a Lewis acid used in Friedel Crafts acylation?

- What is alkylation of benzene?

- Why does nitrobenzene not undergo the Friedel-Crafts acylation reaction?

- What are the limitations of Foucault alkylation reaction?

- What are the limitations of the Foucault acylation reaction?

- What is COA?

- Distinguishing between MSDS, COA, and TDS?

- How can I get a trial size product for free?

- Is there a limit to how many free trial size products I can receive?

- Will I have to pay anything for the trial size product?

- How will I receive the discount coupon?

- Can the discount coupon be used for any product?

- How do I apply to become an Aladdin distributor?

- What is the process after submitting the distributor application?

- Can I return products? What is the return policy?

- What happens if either party wants to terminate the agreement?

- Is there a confidentiality clause in the distributor agreement?

- Does the flow-through antibody to CD206 need to be fixed to break the membrane?

- Are CD8 and CD8α the same indicator?

- Is CD4(domain 1) CD4?

- Is CD44H a CD44?

- Is CD3ε a CD3?

- Is CD45.1/ CD45.2 a CD45?

- What is the difference between Mouse CD90 and CD90.1 and CD90.2?

- What is the difference between Human CD45RA and CD45RO?

- What is the concentration of the primary antibody?

- What does IHC mean on the data sheet? Is it frozen section? Is it different from IHC-F?

- How many slices can I do with 20μI when I have IHC lab-applicable antibodies?

- If the species of my experimental sample is not included in the Reactivity of the antibody, is there any after-sales service available?

- Can the antibody be used in applications that are not described in the package insert?

- How should I choose the secondary antibody?

- How to choose positive controls for antibodies?

- There is a disagreement between the molecular weight of the literature check and the specification, why are there several molecular weights for the same indicator?

- IHC experiment, wondering what are the antigen repair conditions? Should I use EDTA or potassium citrate?

- Can the protein be shipped as a lyophilized powder?Can it be shipped as liquid?

- What are the transportation conditions for proteins?

- Can proteins be used in cell culture/injected mice?

- Does the protein Buffer contain denaturants?

- Are recombinant proteins active? Is it possible to do an activity test?

- By what way are proteins purified?

- Label-free protein purification method?

- What concentration of BSA is recommended for WB experiments?

- How long does the BSA stay closed at room temperature?

- Lysate would like to ask what PMSF does with Na3VO4?

- Lysate lysate not lysed, add ripa and shake, then lysed on ice for 20 minutes and then centrifuged and got a gooey mess?

- How many cells are lysed in 100μl of RIPA protein lysate?

- Can cell lysate lysed cells be followed by immunoprecipitation?

- Can the lysate be used without sodium aluminate?

- Can I keep the protein at -20°C for a month after cooking with loding buffer?

- Can ECL be used in ELISA?

- How long can I keep it at -20°C? Can it be stored for a short time at 4°C or room temperature? Can it be stored at -80°C?

- Can I still use it if I accidentally store it at room temperature for a day?

- If I accidentally boil the 5x Sampling Buffer for 15min, is it still usable?

- Does the sample buffer have an effect on the effectiveness of the electrophoresis solution? If the buffer is accidentally run out does it need to replace the electrophoresis solution?

- Is it feasible to boil and denature proteins before adding protein upload buffer?

- Sediment has been found in this product, can I continue to use it? What should be done?

- Does the protein's own charge affect the buffer?

- If the concentration of the denatured sample is high, how should it be diluted?

- If the protein contains polymer heterobands, dimers may be present, do I need to add doubling buffer to open the dimers?

- When electrophoresis is performed on a sample denatured with buffer, if the sample does not move when the sample volume is high, and if the sample volume is low, the sample runs fast and unevenly, how to solve the problem?

- What is the reason that the protein added to the buffer cooks without precipitation, but the next day when the sample is removed and spiked, precipitation appears? What should be done?

- After lysing the cells first with the lysate, add 5x upsampling buffer in a 4:1 volume, then water bath denaturation is it better to use a 100 degree water bath or a 95 degree water bath?

- After adding buffer to boil denatured proteins for cryopreservation, do they still need to be boiled after they are removed again? Why?

- How can I quickly remove the bromophenol blue that may be obscuring the bands at the end of the gel run?

- What is the reason why the protein after denaturation by adding buffer tends to be difficult to sink and float up easily when spotting? What should I do to solve this problem?

- When extracting proteins from suspension cells, can I add the product directly to boil and denature them?

- Can it be used in non-denaturing electrophoresis?

- Is there a requirement for protein concentration for this product? If the protein concentration is high, do I need to increase the concentration of the buffer?

- After the protein sample has been spiked with Sampling Buffer, boiled at 95°C for 10 min, and centrifuged at 14,000, individual samples will be delaminated prior to sampling, what is the reason for this?

- Protein samples boiled in buffer are blue in color and turn blue-violet after being left at -80°C. What is the reason for this? Does it affect the subsequent electrophoresis?

- BCA concentration measured protein concentration 3mg/ml, but after adding buffer found no protein bands appeared, what is the reason?

- Do I need to add extra mercaptoethanol before using this product?

- At what temperature is the pH 7 .4 of RIPA lysis buffer measured?

- Does RIPA lysis buffer contain reducing agents such as DTT or mercaptoethanol?

- Can RIPA lysis buffer continue to be used after being stored unopened at room temperature for 24 hours? Is there a limit to the number of repeated freezing and thawing?

- Is this lysate suitable for extracting mitochondrial proteins?

- What are the temperature requirements for eluting antibodies in an antibody removal solution? Can the same membrane be eluted more than once?

- Can DAPI be excited with laser confocal λ=405nm after staining the nucleus?

- Can this product be used directly for staining of adherent cells? Can it be used to stain live cells and determine apoptosis by staining?

- Do I need to adjust the pH again after diluting EDTA Antigen Repair Solution?

- Will the pH value change after diluting the product into a working solution?

- What solution should be used to dissolve Proteinase K when used? What is the common working concentration? At what degree does it need to be stored?

- Does Proteinase K powder need to be protected from light when storing and weighing?

- Can tumor tissue cuts be stored for a long time after fixation in 4% paraformaldehyde fixative at room temperature?

- Does paraformaldehyde fixation disrupt cell membranes?

- What is the difference between CD62L and CD62?

- After fixing the cells at room temperature for 15 min with this 4% paraformaldehyde, the cell membrane blisters, do I need to change the fixation temperature to 4°C?

- What do I need to be aware of when storing paraformaldehyde? Is it volatile?

- What is the difference between E-AB-F1272D PE Anti-Mouse lL-17AAntibody[17F3] and EAB-F1199D PE Anti-Mouse lL-17AAntibody[TC11-18H10.1] are different?

- How much Tween needs to be added if I want to use this TBS to prepare TBST?

- What are the recommendations for flow assay of mouse macrophages to detect M1 and M2 types of macrophages?

- Is there a requirement for the mouse strain for the cell flow antibody, since we are experimenting with C57BL6N mice, is it special?

- Does this Hematoxylin Staining Solution need to be diluted for use?

- What related reagents are needed for flow-through staining?

- What should I do if I find that this dye solution produces precipitation? How can I tell if the dye has failed?

- What kind of samples need sealer? Should I wash them after sealing?

- What concentration of Triton X-100 is typically needed for cell lysis? What solutions are typically used for dilution?

- Does WB Primary Antibody Diluent contain sodium azide?

- Is the gel stained with this stain compatible with in-gel digestion and mass spectrometry?

- What is the reason that the blue color is hard to come off after half an hour of dyeing glue with this dye solution? How to solve it?

- How does the sealer work?

- How many times can this stain be reused?

- How is the cell staining buffer used and can I use PBS instead? How much cell staining buffer do I need for one sample?

- How should the experimental groupings/controls be set up?

- How do I dilute the Test-packed antibodies?

- What is the difference between our Flow Antibodies available in Test packages and μg packages?

- The Illuminating the Druggable Genome (IDG)

- The amount of cells is not 1x10^6, only 1x10^5, can the amount of antibody be reduced?

- Can flow antibodies be used when stored at -20°C and then thawed?

- Why centrifuge before use?

- What do host species and species reactivity mean, respectively?

- What are the requirements of sample preparation for the use of centrifuges?

- What is the best way to choose between the different clone numbers of CD4?

- What are the markers for sorting Raw264.7 M1 and M2 cells by flow cytometry? Can they be cultured after sorting?

- I want to test for tumor-associated macrophages, is it okay to use only the F4/80 test?

- If I need to label an antibody with SMCC-activated R-phycoerythrinand then use the antibody coupled with phycoerythrin as an ELISA, what other reagents are needed? Is there a specific procedure?

- Can Erythrocyte Lysate be used on avian erythrocytes?

- Can Erythrocyte Lysate be used at room temperature?

- I want to stain CD206 and need to break the membrane, so should I use cytokine fixative or transcription factor fixative?

- Which is a better sealer, mouse sealer CD16/32 or unmodified mouse whole lgG antibody?

- Is it okay to dilute the rupture agent in intracellular fixation rupture agent with cell staining buffer?

- I want to obtain rare cells other than red blood cells and white blood cells in my sample and keep the original cell viability of the rare cells, is this lysate suitable?

- Isoform controls help eliminate nonspecific staining, so how do you set the door?

- Do I need an isotype control for all treatment groups, e.g.: 12h group, 24h group?

- How do I set up an FMO combined isotype control?

- Is the mouse Fc blocker in μg packaging and is it powdery in nature? Does it dissolve in pbs?

- I purchased three flow-through antibodies, all sized at 50 μg, how many samples can I make?

- Do I need to do a live and dead dye on my sample to eliminate dead cells? What will happen if I don't?

- The client's cells themselves are not fluorescent, but the drug being processed is fluorescent, how does the client go about determining what the drug is fluorescent?

- I want to do a spleen flow-through, and I can't flow-through on the machine until tomorrow after picking up the sample today, how should I store the sample?

- I only have 2.5×10^5 cells in my cell sample, do I only need to use 1.25 μL of flow-through antibody?

- How should I remove platelets from my blood? Can red blood cell lysate be used?

- Is calcium ion-containing HBSS an option for the preparation of mouse tumor single cell suspensions?

- What are the precautions for human peripheral blood testing for intracellular factor?

- What are the considerations for testing tissue samples for intracellular indicators?

- There are two orders of cleavage red for sample preparation, cleavage red followed by staining and staining followed by cleavage red, what to choose?

- My samples are peripheral blood and alveolar lavage fluid from mice, how can I preserve the samples for the maximum amount of time after I remove them?

- When do you use sterile supplies for flow-through antibody samples?

- How to Cite Aladdin Scientific Products and Website in Your Published Work?

- I Would like a compatibility table tailored to specific instruments and their configurations for Fluo™ dyes?

- Product Origin Information & FAQ for Aladdin Scientific

- What's different between CHEMBL13035 and SCHEMBL122822, why does SCHEMBL122822 has an extra "S" at the beginning?

- What if I encounter issues using my discount coupon?

- What types of targets are there?

- read more

- Technical articles

- Targeted protein degradation technology: proprietary drugs are not difficult, drug resistance is no longer

- Strain-promoted alkyne-nitrone cycloaddition (SPANC)

- A bridge to protein science——Aladdin@Polypeptide

- Fluorescent probes – brightening the horizon of biomedical research

- Method and Precautions of SDS-PAGE Gel Preparation

- Aladdin® Plant Research Related Products

- DEAE-Dextran

- IgG

- Dextran Chemistry

- liposomes

- Hyaluronan

- Macrophage Stimulating Protein (MSP)

- Click Chemistry

- BINOL and Derivatives

- Linkers - A Crucial Factor in Antibody–Drug Conjugates

- Karl Fischer Titration to Measure the Water Content of Samples that Do not Readily Release Water

- Application of Gold Catalysts in Industrial Hydrogenation Process

- N-Heterocyclic Carbene (NHC) Ligands

- Applications of Nanoparticles in Pathogen Detection and Identification

- Cannizzaro Reaction

- Clemmensen Reduction

- Guide to Adherent Cell Culture Basics: Seeding, Expanding, and Harvesting

- Application of Granular Materials in Immunoassay

- Properties and Applications of Magnetic Nanoparticle

- Wolff-Kishner Reduction

- Fries rearrangement

- Sulfonyl Chlorides and Sulfonamides

- Alzheimer’s Disease Signaling

- DNA Damage and Repair

- How To Improve the Safety of Electrolyte in Lithium-Ion Batteries?

- Research Progress of High-Voltage Lithium-Ion Battery Materials

- Application of Magnetic Nanoparticles in Protein Expression

- Silica-Coated Gold Nanoparticles: Surface Chemistry, Properties, Benefits, and Applications

- Application of albumin

- Friedel–Crafts Acylation

- Immunoprecipitation technology

- Aldol Condensation Reaction

- Basic Concepts about Catalysts

- Baeyer-Villiger Oxidation

- Cinchona Alkaloids

- Application of Magnetic Particles in Organelle Separation

- Nicewicz Photoredox Catalysts for Anti-Markovnikov Alkene Hydrofunctionalization

- Application of Magnetic Nanoparticles in RNA and DNA Separation

- Homobenzotetramisole (HBTM): A General Organocatalyst for Asymmetric Acylations

- Nanoparticle-Based Small Molecule Drug Delivery

- Visible Light Photoredox Catalysts

- Applications of Nanoparticles in Vaccine Delivery

- Markovnikov’s Rule

- Grignard Reagent

- Neurotransmitters, Receptors, and Transporters

- Hydrogenation Catalysts

- Organic Photoredox Catalysts for Visible Light-Driven Polymer and Small Molecule Synthesis

- Knoevenagel Condensation Reaction

- Grignard reaction

- Nanoparticle-Based Gene Delivery

- Regulation of TGF-beta activity by BMP-1

- Retinoic Acid and Gene Expression

- Guide to Sialylation: I Neu5Ac and Neu5Gc Quantitation

- Guide to Sialylation: II Highly Sialylated Glycoproteins

- Quantitative Sialic Acid Analysis

- SuFEx: Sulfonyl Fluorides that Participate in the Next Click Reaction

- Reductive Amination with 2-picoline-borane Complex

- Copper-Free Click Chemistry

- Mesoporous Materials: Properties and Applications

- Media and Supplements in Cell Culture

- Quantum dots

- Materials for Advanced Thermoelectrics

- Application of Graphene in Photocatalysis

- Silver Nanomaterials for Biological Applications

- Stripping and Reprobing Western Blotting Membranes

- Graphene Inks for Printed Electronics

- Procainamide Labeling Kit-2-Picoline Borane-24T

- Procainamide Labeling Kit-Sodium Cyanoborohydride-24T

- Procainamide Labeling Kit-Sodium cyanoborohydride-96T

- Permethylation Kit

- 2-AB Labeling Kits-Sodium Cyanoborohydride

- 2-AB Labeling Kits-2-Picoline Borane

- Buffer Reference Center

- Poly(N-isopropylacrylamide)-based Smart Surfaces for Cell Sheet Tissue Engineering

- Polymer-Clay Nanocomposites: Design and Application of Multi-Functional Materials

- Light-emitting Polymers

- Small RNA modification: important functions and related diseases

- Conductive Polymers for Advanced Micro- and Nano-fabrication Processes

- Nucleic Acid Gel Electrophoresis—A Brief Overview and History

- Continuous-wave InAs/GaAs quantum-dot laser diodes monolithically grown on Si substrate with low threshold current densities

- Application of nucleoside pharmaceutical intermediates

- Nucleic Acid Electrophoresis Workflow—5 Main Steps

- Polyethylene Glycol (PEG) Selection Guide

- Advanced Inorganic Materials for Solid State Lighting

- Biological buffer: the unusual amongst the usual

- Applications of Fullerenes in Bioscience and Optoelectronics

- General Conjugation Protocols of PEG linkers——PEG Amine

- General Conjugation Protocols of PEG linkers——PEG Acid

- General Conjugation Protocols of PEG linkers——PEG-NHS Ester

- General Conjugation Protocols of PEG linkers——PEG Maleimide

- General Conjugation Protocols of PEG linkers——PEG Thiol

- General Conjugation Protocols of PEG linkers——PEG PFP Ester

- General Conjugation Protocols of PEG linkers——PEG SPDP

- General Conjugation Protocols of PEG linkers——PEG Aldehyde

- General Conjugation Protocols of PEG linkers——PEG ONH2

- What are the advantages of glass chromatographic column?

- Handling Method for Possible Abnormalities of D101 Macroporous Resin in Use

- Use process of combined carbonization dialysis bag

- Filling method and precautions of chromatographic column

- What are the material, dialysis power and speed of viskase dialysis bag?

- Can the ready to use dialysis bag be reused? Yes, but not recommended

- Differences between Ni IDA and Ni NTA agarose gels

- Instructions for ready to use CE membrane dialysis bag

- User Manual of Ready to use RC Membrane Dialysis Bag

- Selection of molecular weight (MWCO) and width of dialysis bag

- Construction and application of single-walled carbon nanotube networks

- Nucleotide Synthesis in Cancer Cells

- EGF Signaling: Tracking the path of Cancer

- PEG-Azide-Alkyne—Bioorthogonal and Click Chemistry

- Enzymes and dietary antioxidants

- Functional group protection and deprotection in oligonucleotide synthesis

- Nucleic Acid Electrophoresis Additional Considerations–7 Aspects

- Preparation and functionalized design of novel graphene-based nanostructures

- Calcium indicators and ionophores

- Oligonucleotide synthesis

- Nucleic acid electrophoresis applications - preparative and analytical electrophoresis

- Editing sugar chains on therapeutic proteins with glycosidase

- Popular Semiconductor Materials: Application Introduction of Gallium Arsenide

- Monosaccharide Release and Labeling Kit-96T

- Study on Alkenyl Fluorinated Building Blocks

- Silicon carbide provides technical solutions for the photovoltaic field

- Papain and its application

- Guidelines for troubleshooting nucleic acid electrophoresis

- Semiconductor material band gap know how much?

- Degradable Poly(ethylene glycol) Hydrogels for 2D and 3D Cell Culture

- 2-AA Labeling Kit-Sodium Cyanoborohydride

- Trifluoromethyl in organic synthesis

- 2-AA Labeling Kit-2-Picoline Borane

- V-Tag Glycopeptide Labeling Kit

- Exoglycosidase Clean-up Plate

- JAK-STAT Cell Signaling Pathway

- Versatile Cell Culture Scaffolds via Bio-orthogonal Click Reactions

- CRISPR/Cas9 and targeted genome editing

- BioQuant Monosaccharide Standard

- Antibody-drug Conjugates: A Comprehensive Guide

- Negative Photoresist Lithography Process

- Advanced enzyme analysis technology

- N2 Column

- Enzymatic determination of pepsin

- N1 Column

- C3 Anion Exchange Column

- C2 Strong Anion Exchange Columns

- CEX Cartridges for O-glycans

- S Cartridges

- T1 Cartridges

- Procainamide Cleanup Plate

- Biomolecular NMR: Isotope Labeling Methods for Protein Dynamics Studies

- Culture scheme of neural stem cells

- Dissociation of cells with trypsin

- Semiconductor Materials: A Summary of Questions

- PD-1/PD-L1 Signaling Pathway

- Research progress of introducing difluoromethyl

- Pre-Permethylation Clean-up Plate

- EB10 Cartridges

- EC50 Cartridges

- EC50 plate

- New Uses for Organoids

- Storage and Use Information Guide of NMR Solvents

- Research Progress of Silicon Anode Materials for High Performance Lithium-ion Batteries

- Aryl fluorination

- Research Progress of Cathode Materials for Lithium-Ion Batteries

- PANoptosis: An inflammatory programmed cell death pathway

- Efficient synthesis of Fluorinated Azaindoles

- Stable Isotope Applications

- Aladdin New Product Label——Your Reagent Usage Guide

- Small Molecule Inhibitors Selection Guide

- Fluoroalkylation: Expansion of Togni Reagents

- Common Modification Strategies for Lithium Battery Separators

- Vitamin D and the prevention of human disease

- Biological enzyme catalysis technology and application

- Hydrosilylation and Hydrosilylation Catalyst

- Stable Isotopes in Drug Development and Personalized Medicine: Biomarkers that Reveal Causal Pathway Fluxes and the Dynamics of Biochemical Networks

- Guide for the Selection of Lithium Salts in the Electrolyte of Lithium-Ion Batteries

- Metabolic signaling pathway

- Protein sample preparation process

- FAQ: Karl Fischer Reagent

- Enzyme Probes

- Conversion Lithium Metal Fluoride Batteries

- Ionic Liquid Electrolytes for Li-ion Batteries

- Allenes Building Blocks with ability to participate in multiple reactions

- Solid State Rechargeable Battery

- The Scope of Application of the Karl Fischer Method

- RNA Viruses Triggered Signal Pathway

- Heck Reaction

- Sample Preparation Method of Karl Fischer Reagent in the Pharmaceutical Industry

- Difference Between Karl Fischer Coulometry and Volumetric Method Compare the Difference Between Similar Terms

- Frequently asked Questions about inhibitors and antagonists related products(FAQ)

- Diels–Alder Reaction

- Cytokines and Inflammation

- Macrophage Activation

- Biginelli Reaction

- Macrophage Stimulating Protein (MSP)

- Nanoparticle-Based Nucleic Acid Delivery

- CXCR4: Receptor for extracellular ubiquitin

- TEMPO Catalyzed Oxidations

- Application of Colloidal Gold in Electron Microscope

- Photoredox Iridium Catalyst for Single Electron Transfer (SET) Cross-Coupling

- Application of Nanomaterials in Photoacoustic Imaging

- Applications of imidazole and its derivatives

- Asymmetric Organocatalysts

- MacMillan Imidazolidinone Organocatalysts

- Application of Nanomaterials in Wastewater Treatment

- Proline-type Organocatalysts

- 1,2,4-Triazole Derivatives for Synthesis of Biologically Active Compounds

- Chemical Synthesis Methods of Nanomaterials

- Substituted Azetidines in pharmaceutical chemistry, organic synthesis, and biochemistry

- Bioactive Compounds and Materials Based on 1,3,4-Oxadiazoles Derivatives

- Alcohol Oxidation Catalysts with Higher Activity than TEMPO——AZADOL®

- Chiral Phosphoric Acids——Versatile Organocatalysts with Expanding Applications

- Click Chemistry in Drug Discovery

- How to Prepare Graphene Quantum Dots?

- Soluble Pentacene Precursors

- A New Role for Angiotensin II in Aging

- What is TDS?

- Optimizing Quantum Dots to Maximize Solar Panel Efficiency

- Quantum Dots for Electronics and Energy Applications

- Fluorescent Probes - Absorption and Fluorescence

- Applications of Quantum Dot Technologies in Cancer Detection and Treatment

- Fluorescence Quenching

- Perovskite Quantum Dots (PQDs)

- 1,3-Thiazole building blocks in natural products and synthetic materials

- Dye-Aggregation

- Antibody structure and isotypes

- Realizing High Efficiency in Organic Light Emitting Devices

- Quantum Dots——A Definition, How They Work, Manufacturing, Applications and Their Use In Fighting Cancer

- Loading Controls for Western Blotting

- Flow Cytometry

- Derivatives of 1,3,4-thiadiazoles for Various Applications in Drug Discovery, Agrochemistry, and Materials Technology

- Flow cytometry analysis

- How to choose and use antibodies-primary antibody?

- Quantum Computing - Is it the Future?

- Inorganic Interface Layer Inks for Organic Electronic Applications

- How to find the right secondary antibody?

- Copper(I)-catalyzed azide-alkyne cycloaddition (CuAAC)

- How to Choose the Correct Reference Material Quality Grade?

- Antibody applications and techniques

- Reactions of strained alkenes in click chemistry

- Strain-promoted azide-alkyne cycloaddition (SPAAC)

- Determination of Additives in Beverages

- Potential Applications in Click Chemistry

- Click Chemistry

- Fluid chemistry: Lighting the fire of hope for the large-scale production of anticancer drugs

- Magnetic Resonance Imaging

- Synthesis of molecular block “two-sided” indole

- New Synthesis Method of New Nickel Reagent and Boric Acid (Ester)

- New Breakthrough in the "Transformation" Technology of Pentane Skeleton Drugs: One-Pot Synthesis of Difluorobicyclo[1.1.1]pentanes

- Ellman's Sulfinamides

- The application of click chemistry in chemical ligation and peptide modification

- One of the "Golden Triangle" series of building blocks: acridine-based photoaffinity probes with "big" applications in a small body

- Innovations in the design of stereospecific drug molecular structures: Spirocyclic Scaffolds

- Spiralane: opening a new chapter of non-benzene pharmacy molecules

- Halogen Bond: Leading Drug Design into a New Chapter

- D-DTTA Salts of Azaindole Chiral Amines: New Options for Chemical Splitting

- Cyclic isomers--Azabicyclic molecular building blocks to aid drug design

- IACS-52825: A Potent and Selective DLK Inhibitor

- Action type of ligand acting on target

- Targeted drug discovery: scarce and inefficient, what is the geometry of its effectiveness?

- Explore the Industrialization Process of SOS1 Inhibitor MRTX0902

- The process path of EBL-3183: from indole to preclinical inhibitor

- ROS1 Fusion Inhibitor NVL-520 with MGAT2 Inhibitor BMS-963272

- GDC-1971: SHP2 Inhibitor Ushering in a New Era of Cancer Treatment

- CC-92480,Dalcetrapib,Tovorafenib and AZD4604

- Immunocytochemistry (ICC) protocol

- Aladdin® Reducing Agents

- Oxidizing Agents

- Antibody-coupled drug ADCs: Precise guidance, cancer destruction

- Thioacetic acid (AcSH)

- Ammonia borane/Borane ammonia complex

- Alcohol dehydrogenase (ADH)

- Acetone

- 9-Azabicyclo[3.3.1]nonane N-Oxyl, ABNO

- Ascorbic Acid; Vitamin C

- Interleukins - the communications arm of the immune response

- Tetrahydroxydiboron/Bis-Boric Acid/BBA/B2(OH)4

- 9-Borabicyclo[3.3.1]nonane / 9-BBN

- Acrylonitrile

- Ammonium peroxydisulfate

- 1,4-Benzoquinone, BQ

- Aladdin@Guidelines for the formulation of commonly used buffers for biological experiments

- Benzaldehyde

- Oxidizing Agent-Benzaldehyde

- Benzophenone

- Benzyl Alcohol

- Bis(neopentylglycolato)diboron, B2nep2

- Antibiotics block bacterial protein synthesis

- Benzoyl Peroxide (BPO)

- Sodium Hypochlorite (NaOCl)

- N-Bromosaccharin

- Bis(pinacolato)diboron, B2pin2

- Guidelines For Common Cell Culture Media

- Boranes (Dimethylsulfide Borane, Borane-Tetrahydrofuran Complex)

- Trimethylamine borane / Borane trimethylamine complex

- N-Bromosuccinimide (NBS)

- Burgess reagent

- Crotononitrile, (E)-But-2-enenitrile

- 108 SadPhos, Find Your Own Chiral Phosphine Ligands/Catalysts

- Phytohormones commonly used in tissue culture and their functions

- Hypophosphorous acid and hypophosphite salts

- sec-Butanol, 2-Butanol

- N-Fluoro-2,4,6-trimethylpyridinium triflate

- Catecholborane

- 1,4-Cyclohexadiene, CHD

- N-tert-butylbenzenesulfinimidoyl chloride

- tert-Butyl hydroperoxide, TBHP

- Agrobacterium reforming process, the key technology of plant genetic engineering

- Tert-Butyl Nitrite (TBN)

- Recent Advances in the Catalytic Transformations to Access Alkylsulfonyl Fluorides as SuFEx Click Hubs

- Elemental Analysis of Lithium Salts Utilizing ICP-MS Technology, Integrated with Argon Gas Dilution (AGD) Methodology

- Utilizing ICP-OES for Evaluating the Purity Levels of Lithium Carbonate and Lithium Hydroxide

- Tert-Butyl peroxybenzoate, TBPB

- The Key to Gene Delivery to Cells —In Vitro Cell Transfection Techniques

- Determination of chloride and sulfate in saturated lithium hydroxide solution

- Innovative Materials for Glass Ionomer Cements (GICs): Dental Applications

- C–H Amination

- Diethoxymethylsilane, DEMS

- Greener Methods: Catalytic Amide Bond Formation

- Lipopolysaccharide (LPS): The Bacterial Outer Membrane

- Antimicrobial Peptides: A Promising Field in Antibiotic Research

- Tetrabromomethane, CBr4

- Cerium Ammonium Nitrate, CAN

- DIBAL-H, Diisobutylaluminium hydride

- FITC-Labeled Polysaccharides—— The Polysaccharide World Under Fluorescent Probes

- Chloramine-B, N-chlorobenzenesulfonamide sodium salt

- Diethylsilane

- 1,3-Dimethylimidazol-2-ylidene borane (diMe-Imd-BH3)

- Chloramine-T, N-chloro tosylamide sodium salt

- Chloranil, Tetrachloro-1,4-benzoquinone

- Stimulus-responsive materials for intelligent drug delivery systems

- Ethidium Bromide: Beautiful and Dangerous

- Jørgensen’s Organocatalysts

- Solid-Phase Peptide Synthesis (SPPS) — Efficient Strategies and Innovative Techniques

- Rh2(esp)2 Catalyst

- 1, 4-Dioxane

- Poly(Glycerol Sebacate) in Tissue Engineering

- Diphenylsilane

- Chromium Compounds

- Choline peroxydisulfate (ChPS)

- Synthesis and Metabolism of Glutamate and GABA: Core Mechanisms of Neurotransmitters

- Immunohistochemical Antibody Selection Points

- 3-Chloroperoxybenzoic acid, m-CPBA

- Chromium Trioxide (CrO3)

- Principles of Western Blot Internal Reference Antibody Selection

- Second Antibody Selection Guide

- How to choose antibodies wisely?

- Selection of Mouse-Specific Cell Surface Markers

- Methods of selecting isotype controls in flow experiments

- How do you really choose flow antibodies? What to look for?

- How to Choose Flow Antibodies?

- Selection of fluorescent dyes for multicolor flow-through experiments

- Common sense in the purchase of flow antibodies

- Preservation methods and precautions for common flow-through samples

- Protocol for the preparation of single-cell suspensions of common tissue samples in flow experiments

- Characterization and application scenarios of various enzymes in the preparation of tissue single-cell suspensions

- Dimethoxymethylsilane, DMMS

- RAFT Polymerization

- Ethanol

- Peripheral blood anticoagulant, EDTA or heparin?

- Scenarios of application of different preparation methods for peripheral blood

- MCAT-53™ Catalyst for Ruthenium Formation

- Diversinates™: Any-Stage Functionalization of (Hetero)aromatic Scaffolds

- The Key Molecule in Neurotransmission—Acetylcholine

- Gold Nanoparticles: Properties and Applications

- Biological functions of somatostatin and its receptors

- The Importance of L-Tyrosine in Laboratory Research and Cell Culture

- Aladdin®Oxidation Reagents

- Iron (low valent)

- Formaldehyde

- Solvothermal Synthesis of Nanoparticles

- Cumene Hydroperoxide (CMHP, CHP)

- Pyridinium Chlorochromate (PCC) Corey-Suggs Reagent

- Buffer preparation guide

- Immunohistochemistry FAQ Analysis

- Overview of Organs-on-Chips

- How to efficiently and accurately add samples for rt-qPCR?

- Chelating Agents

- What is the reason for the dirty background in the WB test? How can I fix it?

- Linear range of protein separation with different concentrations of separating gel

- The sampling amount of each component corresponding to the volume of different concentrated glues

- Western Blot Various components corresponding to different volumes of separation gel

- Immunohistochemistry kit experimental procedure

- Cell nuclear factor staining process

- Steps of paraffin section immunohistochemistry

- Guide to immunofluorescence experiments

- Guide to immunoprecipitation experiments

- Western Blot FAQ Analysis

- Introduction to the principles related to the immunoblotting experiment

- IHC principle, operation and precautions

- Analysis and handling of IHC common problems

- Western experimental procedure

- A Comprehensive Technical Article on Endoplasmic Reticulum and Organelle Staining Reagents

- Cytoskeletal Staining Reagents

- Aladdin® Target Proteins

- Electrochemical Allylic C-H Oxidation with N-Hydroxytetrachlorophthalimide (TCNHPI)

- How to prevent and mitigate the deterioration of chemical reagents?

- Environmental conditions for storing laboratory chemicals

- Hydrazine

- White Catalyst

- Flow cytometry sample preparation techniques

- Preparation and precautions for single-cell suspensions of mouse tumor samples

- Procedures and precautions for preparation of mouse thymus single cell suspension

- Procedures and precautions for the preparation of mouse peripheral blood single-cell suspensions

- Procedures and precautions for the preparation of mouse spleen single-cell suspensions

- Preparation and precautions for mouse lymph node single-cell suspensions

- Mouse Bone Marrow Single Cell Suspension Preparation Procedures and Precautions

- Preparation of mouse ascites and single cell suspension and precautions

- Procedures and precautions for the preparation of human peripheral blood monocyte suspensions

- Preparation and precautions for human peripheral blood PBMCs

- Intranuclear Factor Staining Procedure

- Flow cytometric surface staining procedure

- Common Problems and Solutions for Flow Experiments

- Flow cytometry procedure

- Aladdin®Compound Libraries

- Dicumyl Peroxide (DCP)

- Indium (low valent)

- Aladdin®Metabolites

- 1,3-Dibromo-5,5-Dimethylhydantoin, DBDMH

- Aladdin®Natural Products

- Aladdin® Loading Controls

- Aladdin®Grignard Reagents

- LAH, Lithium aluminum hydride, Lithium tetrahydridoaluminate

- Lithium triethylborohydride, LiTEBH, Superhydride

- Designing Temperature and pH Sensitive NIPAM Based Polymers

- Diethyl azodicarboxylate (DEAD)

- Aladdin®Inorganic ligands

- Lithium

- 2,3-Dichloro-5,6-Dicyanobenzoquinone, DDQ

- Aladdin®Ligands of Immunopharmacology

- Aladdin®Antibiotics Commonly Used in Experiments

- Aladdin®Peptide ligands

- Lithium tri-tert-butoxyaluminum hydride, LTBA

- Aladdin®Halogenation Reagents

- 1-Hydrosilatrane

- Fluorescent Microparticles and Nanobeads

- Diethyl allyl phosphate, allyl diethyl phosphate (DEAP)

- Dendrons and Hyperbranched Polymers: Multifunctional Tools for Materials Chemistry

- 1,3-Diiodo-5,5-Dimethylhydantoin, DIH

- The Fibroblast Development Factor (FGF) Family

- Wnt/β-Catenin Signaling Pathway

- Comprehensive Overview of Vascular Endothelial Gth Factors (VEGF)

- Aladdin® Synthetic Organic Ligands

- 3-Mercaptopropionic acid, 3-MPA

- Magnesium

- Single-Electron Oxidation-Induced Chemical Transformations: Carbon-Carbon Bond Formation and Selective Oxaziridine Rearrangement

- Diisopropyl azodicarboxylate (DIAD)

- Manganese

- Methanol

- Dimethyl sulfoxide (DMSO)

- Alkaline Phosphatase Substrate Detection System

- 2,5-di-tert-butyl-1,4-benzoquinone (2,5-DTBQ)

- Dess-Martin periodinane (DMP)

- 3D/4D Printing Technologies

- Peroxidase Substrate Detection System

- PCR Laboratory Contamination Prevention and Efficient Reagent Application: —Optimizing Experimental Steps and Choosing

- Yeast Two-Hybrid (Y2H) Technology

- Spirocyclic Building Blocks for Scaffold Assembly

- α-Picoline-borane, 2-Methylpyridine borane, PICB

- Molybdenum hexacarbonyl

- Inkjet Printing for Printed Electronics

- Neodymium (low valent)

- RAFT: Choosing the Right Agent to Achieve Controlled Polymerization

- Nickel

- Tetramethyl-1,4-benzoquinone(DQ)

- Applications of Flavors and Fragrances in the Food Industry

- Di-tert-butyl peroxide (DTBP)

- 3,3',5,5'-tetra-tert-butyldiphenoquinone (DPQ)

- Introduction to Various Types of PCR Technology

- Non-viral Vectors for Gene Transfection: Polyethyleneimine

- The Technology Driving Biomedical Revolution — Animal Modeling

- Folate Metabolism and Cancer: Biological Mechanisms and Therapeutic Strategies

- Niobium

- Phosphorous acid

- Ellman's Sulfinamides

- Dextran: Multifunctional polysaccharides for biology and medicine

- read more

- Protocols

- Wolfe's Mineral Solution

- Alkaline Lysis Buffers A, B, C Recipes

- A Broth (Powder)

- Acrylamide (30%) Recipe

- Amies Broth with Charcoal (Amies Transport Medium) (Powder)

- Acrylamide (40%) Recipe

- 5-Fluoroorotic Acid Monohydrate (FOA, 5-FOA)

- Antibiotic Medium #1 (Powder)

- Bradford's Reagent Recipe

- Cell Culture Protocols

- Antibiotic Medium #3 (Powder)

- Antibiotic Medium #4 (Powder)

- Cell Staining Buffer Recipe

- Cell Stimulation Cocktail

- Antibiotic Medium #9 (Powder)

- Bromthymol Blue Recipe

- Antibiotic Medium #10 (Powder)

- CFSE Protocol

- Antibiotic Medium #11 (Powder)

- APT (All Purpose Tween) Agar (Powder)

- Azide Dextrose Broth (Powder)

- Bacillus cereus Medium (BCM) (Powder)

- Baird Parker Agar (Powder)

- BiGGY Agar (Powder)

- Bile Esculin Agar (Powder)

- Bismuth Sulfite Agar (Powder)

- Blood Agar Base No. 2 (Powder)

- Blood Agar Base, Low pH (Powder)

- Brain Heart Infusion Agar (Powder)

- Brain Heart Infusion Broth (Powder)

- Brain Heart Infusion Broth w/o Dextrose (Powder)

- Brilliant Green Agar (Powder)

- Brilliant Green Agar w/Sulfadiazine (Powder)

- Brilliant Green Bile Broth 2% (Powder)

- Bromthymol Blue

- Brucella Agar (Powder)

- Brucella Broth (Powder)

- Buffered Peptone Water (Powder)

- Campy Selective Agar Base (Powder)

- Casman Medium Base (Powder)

- Chapman Stone Medium (Powder)

- CLED Agar (Powder)

- CLED Agar, Bevis (Powder)

- Clostridium Difficile Agar (Powder)

- Clostrisel Agar (Powder)

- Columbia Blood Agar Base (Powder)

- Columbia CNA Agar (Powder)

- Cooked Meat Medium (Powder)

- Corn Meal Agar (Powder)

- Decarboxylase Broth Moeller (Powder)

- D/E Neutralizing Agar (Powder)

- Deoxycholate Agar (Powder)

- Deoxycholate Citrate Agar (Powder)

- Coomassie Blue Solution Recipe

- Denhardt Solution (50x) Recipe

- Deoxycholate Citrate Lactose Sucrose (DCLS) Agar (Powder)

- DEPC Treated Water Recipe

- Destain Solution Recipe

- DNA Loading Buffer (Orange G) Recipe

- DOT Blot Protocol

- ELISA Blocking Solution Recipe

- ELISA Coating Solution Recipe

- ELISA Methods

- Deoxycholate Lactose Agar (Powder)

- Dermatophyte Test Medium (DTM Test Agar) (Powder)

- Dextrose Agar (Powder)

- Dextrose Broth (Powder)

- ELISA Sample Collection & Storage

- Flow Cytometry General Protocol

- Glycerol Tolerant Gel Buffer 20X

- Immunocytochemistry

- Immunofluorescence General Protocol

- Immunohistochemistry General Protocols

- Dextrose Phosphate Broth (Powder)

- Dextrose Starch Agar (Powder)

- Immunoprecipitation General Protocol

- Dextrose Tryptone Agar (Powder)

- Dextrose Tryptone Broth (Powder)

- Deoxyribonuclease (DNase) Test Agar (Powder)

- Immunoprecipitation using Affinity Agarose Resins

- Deoxyribonuclease (DNase) Test Agar w/Toludine Blue (Powder)

- Laemmli Sample Buffer 2X

- Modified RIPA Buffer

- NADI Reagent

- Earle’s Balanced Salts (Powder)

- EC Medium (Powder)

- EC Medium w/MUG (Powder)

- NP-40 Cell Lysis Buffer

- Nuclease P1 from Penicillium Citrinum

- Elliker Broth (Powder)

- Pepsin

- Eosin Methylene Blue Agar (Powder)

- Phosphate Buffered Saline (PBS) 1x

- Eosin Methylene Blue Agar, Levine (Powder)

- Phosphate Buffered Saline (PBS) 10x

- Eugonic Broth (Powder)

- Phosphate Buffered Saline Tween-20 (PBST) 1x

- Red Blood Cell Lysing

- Red Blood Cell (RBC) Lysis Buffer

- RNA Extraction

- TAE 50x

- TBE 10x

- TE 1x

- TES

- Tissue Homogenization Buffer for ELISA

- TNE 1x

- Formaldehyde Gel Running Buffer

- Fluid Thioglycollate Medium w/K Agar (Powder)

- H Broth (Powder)

- L-Broth (Luria Broth) (Powder)

- Lambda Agar (Powder)

- Lambda Broth (Powder)

- LB Agar Lennox (Powder)

- Transfection Protocol

- LB Agar Lennox, Animal Free (Powder) (Lennox L agar)

- Tris Glycine Buffer 5x

- Tris-Buffered Saline (TBS)

- Tris-Buffered Saline Tween-20 (TBST)

- LB Agar Miller (Powder)

- TTE 1x

- Western Blot

- LB Agar Miller, Animal Free (Powder) (Miller's LB agar, Luria-Bertani agar)

- Western Blotting Transfer Buffer

- X-Gal Staining Solution

- Xylene Cyanol/Bromophenol Blue DNA Loading Buffer 10x

- Zymolyase

- LB Broth Lennox (Powder)

- LB Broth Lennox, Animal Free (Powder) (Lennox L broth base)

- LB Broth Miller (Powder)

- LB Broth Miller, Animal Free (Powder) (Miller's LB broth, Luria-Bertani broth)

- LPGA Agar Medium

- M63 Medium (Powder)

- M9 Minimal Salts (Powder)

- Nutrient Agar (Powder)

- Nutrient Agar 1.5% (Powder)

- Nutrient Broth (Powder)

- NZ Broth (Powder)

- NZC Broth (Powder)

- NZCYM Agar (Powder)

- NZM-Agar-Powder

- NZM Broth (Powder)

- NZYM Agar (Powder)

- NZYM Broth (Powder)

- SOB Agar (Powder)

- SOB Broth (Powder)

- SOC Broth (Powder)

- Sodium Citrate Buffer

- SSPE 20X

- STAB Agar (Powder)

- STET

- Super Broth (Powder)

- Terrific Broth (Powder)

- Terrific Broth, Complete with Carbon Source (Powder)

- Terrific Broth, Modified for Genomics (Powder)

- Terrific Broth, Modified for Fermentation, non-animal (Powder)

- Thermophilus Vitamin-Mineral Stock 100X (TYE Media, Castenholz Media) (Powder)

- Thermophilus Vitamin-Mineral Stock 1000X (TYE Media, Castenholz Media) (Powder)

- Tryptone Agar (Powder)

- Tryptone Broth (Powder)

- Two YT Broth (TY Broth) (Powder)

- Yeast Malt Extract Broth

- YT Broth (Powder)

- Synthetic Sea Water

- Synthetic Amino Acid Medium, Bacteriological, SAAM-B (Powder)

- Synthetic Amino Acid Medium, Fungal, SAAM-F (Powder)

- Culture Conditions and Types of Growth Media for Mammalian Cells

- Staining Dead Cells with Viability Dyes

- B5 Fixative Recipe

- Bouin's Fixative Recipe

- Ehrlich's Solution Recipe

- Field's Stain

- Flagella Stain

- Gentian Violet Stain

- Giemsa Stain

- Hellings 10X

- Intracellular Antigen Staining

- Kovac's Reagent

- Papanicolaou's Stain

- Paraformaldehyde (2%)

- Schiff's Reagent

- Tissue Fixation Solution

- Tissue Lysates Preparation

- Trypan Blue

- Zenker's Fixative

- PCR, Real-Time

- RT-PCR

- Hollande's Fixative

- Blood Agar Base, pH 7.4 (Powder)

- PCR, MIQE Guidelines

- Flow cytometry protocol

- Immunoprecipitation (IP) lysates and reagents

- Native ChIP (N-ChIP) Protocol

- Protocol and tips for whole mount immunohistochemical staining

- Flow cytometry cell cycle analysis using propidium iodide DNA staining

- Cross-linking ChIP-Seq (X-ChIP-Seq) protocol

- Chromatin preparation from tissues for chromatin immunoprecipitation (ChIP)

- Experimental Guide of Sample Preparation for Flow Cytometry

- Immunohistochemistry staining with samples in paraffin (IHC-P)

- Immunohistochemical staining protocol for frozen samples (IHC-Fr)

- RNA dot blot protocol

- Immunohistochemistry (IHC) staining antigen retrieval protocol

- GST Pull-Down Protocol

- In-cell ELISA protocol

- Enzyme-linked Immunospot Technique ( ELISPOT ) Protocol

- Immunopeptide blocking assay

- Fura-2 AM Staining Protocol

- Annexin V-FITC staining to detect cell apoptosis

- Guidelines of Primary Antibodies for Western Blotting

- BrdU staining protocol

- Caspase Immunofluorescence Staining Protocol

- Automated Immunohistochemical Staining Protocol for Anti-PD-L1

- Subcellular Fractionation Protocols

- BMDC Isolation Method

- Boc resin lysis protocol

- Competent E. coli Cell Preparation Using the Calcium Chloride Method

- General Protocol for Western Blotting

- Methods of Lysate Preparation for Western Blotting

- Western Blotting with Fluorescently Labeled Antibodies

- E. coli Competence Protocol for Plasmid Transformation

- BN-PAGE Protocol

- Histone Western Blot

- Amine-Reactive Probe Labeling Experimental Protocol

- Protocol of Western blot for Phosphorylated Proteins

- Immunoprecipitation Protocol for Native Proteins

- Surfactant-Free Nuclear Protein Extraction Protocol

- Instructions for Selecting Compounds and Creating a Customized Screening Library

- Protocol of Western Blotting For High Molecular Weights

- General Immunoprecipitation Protocol

- Immunofluorescence Staining Protocol (Methanol permeabilization)

- Protein Dephosphorylation Protocol

- Protein purification experiments

- Angiogenesis experiment

- Isolation and culture of osteoclasts

- Spot immunofiltration assay

- Determination of Minimum Inhibitory Concentration (MIC)

- Amino acid content measurement experiment

- HIV drug resistance testing

- cell irradiation culture

- Expression purification of endolysin

- Construction of a subcutaneous hormonal tumor model of melanoma

- RNA Immunoprecipitation (RIP) Protocol

- In Situ Hybridization (ISH) Protocol

- Activation and monitoring of inflammatory vesicles

- Protein expression system construction

- Eukaryotic expression of proteins

- Dual luciferase reporter assay

- Acid phosphatase activity assay

- Protein Oligomerization Assay

- Yeast two-hybrid technology

- Electrophoretic separation of proteins

- Analysis of amino acid metabolites

- Lipid metabolite analysis

- CUT&RUN-seq protocol

- Pentose phosphate pathway

- Determination of key enzymes and important products in the anaerobic oxidation pathway

- X-ray crystallography

- GST pull-down analysis

- Experiments for the determination of the isoelectric point of proteins

- Experiments for precise molecular weight determination of proteins

- Determination of total protein content based on protein light absorption properties

- Determination of total protein content based on protein elemental composition

- Experiment to determine the content of cell membrane proteins

- Determination of NADPH-cytochrome C (P-450) activity

- Preparation of DNAase I Footprint Probes

- Elution from PEI precipitation σ32 and RNA polymerase assay

- Dialyzed to obtain soluble refolding σ32 Monomer experiments

- Experimental determination of protein concentration by bicinchoninic acid (BCA) assay

- Identification of IgG purity by polyacrylamide gel electrophoresis

- Purification of PPO crude enzyme solution

- Reversible staining of proteins on PVDF membranes

- TNF-α Biological Activity Assay

- Extraction and activity of ATPase from cardiac myofibrillar fibers

- Determination of substrate binding spectra to hepatic cytochrome P-450

- Heparanase Lowry colorimetric assay

- Expression and purification experiments of LacZ and trpE fusion proteins

- Separation of lactate dehydrogenase isozymes by agarose gel electrophoresis assay

- Isolation and purification of animal genomic DNA

- Tm value measurement of DNA

- Extraction of total plant RNA

- Formaldehyde denaturing gel electrophoresis to identify RNA experiment

- DEAE cellulose column chromatography purification of enzyme protein experiment

- Determination of sucrase activity at various levels and calculation of purification rate experiments

- Carrier amphoteric electrolyte pH gradient isoelectric focusing experiment

- Dissolution, refolding and ion exchange chromatography experiments on inclusion body precipitates (σ32)

- Quantitative SDS gel staining and scanning

- UV spectra of pure proteins A280 nm/A260 nm Experimental

- Measurement of extinction coefficients (Scopes method)

- Dialysis for the removal of sodium N-dodecyl sarcosinate

- Protein kinase C isoform analysis experiment

- Recombinant MVA in vivo immunostaining assay

- Affinity purification assay of GST fusion proteins

- Far Western analysis of protein interaction experiments

- RIP technology

- Fluorescent staining of frozen sections for amyloid (AMYLOID) thioflavine T

- Biopharmaceutical Glycosylation Site Assay

- Total protein extraction from adipose tissue and cells

- Determination of protein concentration by BCA method

- Experiments on the preparation of yeast extracts

- Experiments on lysis of recombinant proteins of Escherichia coli from inclusion bodies

- Experiments on bulk extraction of recombinant proteins from bacteria

- Experiments on lysis of cultured cells by depressurization of nitrogen compartments

- Lysis experiments of cultured animal cells, yeast and bacteria for immunoblotting

- Phosphorylation Site Analysis Experiment - Determination of Phosphorylated Amino Acid Types

- Dissolution and reduction of samples for two-dimensional polyacrylamide gel electrophoresis

- Experiments on the purification of proteins by filtration chromatography using filter gel

- Experiments on the purification of proteins by ion exchange chromatography

- Interaction cloning experiments

- Protein expression experiments in mammalian cells

- Dye-matched base chromatography for protein purification experiments

- Experiments on protein purification by lectin affinity chromatography

- Immunopurification experiments

- Preparation of affinity columns

- Batch Chromatography for Protein Concentration

- Capillary electrophoretic analysis of proteins

- Discontinuous nondenaturing coagulation limb electrophoresis experiments

- Experimental determination of protein concentration by spotted filter membrane binding method

- Determination of protein concentration by Lowry's assay

- Solubilization and replication experiments of inclusion body proteins

- Chemical degradation of fusion proteins

- Fusion Protein Enzymolysis Assay

- Experiments for direct analysis of kinases in gels

- Tyrosine kinase analysis assay

- Ca2+/calmodulin-dependent kinase analysis assay

- Cyclic nucleotide-dependent protein kinase analysis assay

- Preparation of monoclonal antibodies against phosphopeptides.

- Detection of specific amino acids in phosphopeptides by secondary discriminatory digestion assay

- Determination of phosphorylated amino acid positions in peptides

- Analyzing peptide separation experiments

- Isoelectric spot focusing for protein separation

- Protein purity assay

- Protein elution experiments in gels

- Purification experiments of membrane proteins

- Identification, production and use of polyhydroxy-reactive monoclonal antibodies - for immunoaffinity chromatography

- Protein gel staining assay

- Hydroxyapatite columns for protein chromatography experiments

- Protein precipitation technology

- Gel filtration experiment

- Experiments on the preparation of biological extracts required for protein purification

- Labeling experiments for protein expression

- Refolding experiments after inclusion body proteolysis

- Experiments on the preparation of recombinant proteins by transient gene transfer in mammalian cells

- Arbovirus-Insect Cell Expression System

- Selection of suitable methods for recombinant protein expression

- Bacterial expression systems for exogenous protein production

- Protein concentration and solute removal experiments

- Maintenance of protein stability

- A proteomic approach to the risk assessment of peroxisome proliferating contaminants in the marine environment

- Proteomic analysis of AtT20 pituitary cells as a model for neuroendocrine peptidergic system interference

- Experiments on methods of applying end truncation, evolution, and re-elongation techniques to improve enzyme stability

- Synthesis of a parsimonious evolutionary library of the Ras-binding structural domain of the Raf protein and cloning experiments for rapid screening of dihydrofolate reductase using the fragment complementation method

- Experiments in compartmentalized self-replication (a new approach to the directed evolution of polymerases and other enzymes)

- Ribosome Inactivation Demonstration System Experiment

- M13 Experiments on the application of phage coat protein modification in improved phage display technology

- Simplified oligonucleotide gene mixing assay

- DNA fragmentation and directed evolution experiments using nucleotide exchange and shearing techniques

- Design and screening of protein libraries - a probabilistic calculation experiment

- Site-specific nucleic acid endonucleases for protein engineering experiments

- Antibody simulation experiments based on the framework of fibronectin type III structural domains

- Design and synthesis experiments of artificial zinc finger proteins

- Calcium indicator experiments based on the fusion of calmodulin and fluorescent protein

- Experiments on design and optimization techniques for coiled spiral structures

- Integral adulteration experiments of non-natural amino acids in Escherichia coli

- Experiments on combinatorial protein design strategies using computational methods

- Determination of isoelectric point of proteins by isoelectric focusing electrophoresis

- SDS-PAGE protein sample preparation

- Peptide isolation and purification experiments for protein internal sequence analysis

- Separation of phosphopeptides with iminodiacetic acid (iron chelate) Sepharose 6B

- Performic acid oxidation experiments on proteins

- Acidified acetone/methanol precipitation method experiment

- Precipitation experiment with trichloroacetic acid

- silver dyeing experiments

- Analytical experiments on glycosylation of insulin receptors

- Autophosphorylation experiments on insulin receptor stimulated by insulin

- Cross-linking experiments of the insulin receptor with [125I]insulin

- Coupling experiments of ligands with activation media

- Insulin affinity chromatography assay

- Lectin affinity chromatography experiments on solubilized receptors

- Screening experiments of descaling agents for solubilizing membrane proteins

- Insulin receptor solubilization and activity assay experiments

- Experiments for the determination of the number of insulin receptors on membranes

- Measurement of protein concentration in the presence of interfering substances

- An alternative experimental approach to the isolation of crude plasma membrane fractions from tissues

- Neville's method for the separation of liver plasma membrane

- New purification experiments of bacterial overexpression products (without disulfide bonds)

- Protein binding assay with immunoaffinity mediators

- Precipitation σ32 and RNA polymerase assays

- Inclusion body precipitation dissolution assay

- Rapid protein spot blotting assay

- Evaluation of homogeneity experiments by nondenaturing gel electrophoresis mobility shift rate method

- Purity estimation experiment by overloaded stained SDS gel scanning method

- Enzyme assay experiment

- Quantitative protein spot blotting assay

- Quantitative measurement of proteins

- immunoaffinity chromatography

- Experiments on the fragmentation of Escherichia coli cells and preparation of inclusion bodies

- Heparin-Sepharose CL-2B preparation experiment

- Gel mobility change analysis experiment

- Operational experiments in affinity chromatography

- Preparation of DNA affinity media

- Experiments on purification of oligonucleotides by preparative gel electrophoresis

- Sephacryl S-300 HR column chromatography of HeLa cell nucleus extracts

- How to load gel filtration column experiment?

- Experiments on the preparation of nuclear extracts from HeLa cells

- Trypsin digestion assay of calmodulin

- Cyanogen bromide cleavage assay of calmodulin

- Urea gradient polyacrylamide gel electrophoresis and folding analysis experiments

- Phenyl-Sepharose chromatographic assay of calmodulin

- DEAE SEPHADEX A-50 anion-exchange chromatography assay for calmodulin

- Crude graded separation experiments of calmodulin

- Experimental extraction of calmodulin from chicken gizzard

- Activation assay of calmodulin samples

- Titration curve experiment

- Polyacrylamide gel electrophoresis of serum proteins

- Serum lipoprotein agarose electrophoresis assay

- Protein ultraviolet spectrometry assay

- Protein sequence analysis and structure prediction experiments

- The effect of substrate concentration on catalytic reaction rate and the determination of Mie constant Km and maximum reaction velocity Vmax

- Experiments on the preparation of yeast sucrase

- Separation of nucleotides by chromatography on ion exchange columns

- Analysis of N-terminal amino acids of proteins by dansulfonylation method

- Electrophoretic separation and identification of proteins on cellulose acetate membranes

- Molecular weight determination of proteins by gel filtration chromatography experiment

- Salt chromatographic graded separation and gel chromatographic desalting of proteins

- Electrotransfer experiments on proteins

- Phosphate amino acid analysis experiment

- Experiments to determine peak protein production

- Metabolic labeling experiments of recombinant proteins

- Expression and purification experiments of thioredoxin fusion protein

- Expression and purification experiments of glutathione S-transferase fusion protein

- Probe synthesis experiments

- Capture experiments of interacting proteins

- Characterization of decoy proteins

- Molecular exclusion high performance liquid chromatography experiments

- affinity chromatography

- Protein recovery assay

- Immunoscreening assay

- Immunofluorescence localization experiments of nuclear proteins

- Metallothionein Measurement Experiment

- Determination of the activity of myocardial sarcoplasmic reticulum calcium-triphosphate adenosine monophosphatase

- Extraction of myocardial sarcoplasmic reticulum calcium-triphosphate adenosine monophosphatase

- Determination of sodium, potassium-triphosphate adenosine triphosphatase activity

- Induction of heparanase

- Determination of aniline-4-hydroxylase activity

- Determination of glucuronide-transferase activity

- Determination of glutathione-S-transferase activity

- Determination of tissue level metallothionein by cadmium-hemoglobin method

- Determination of urease activity in soybean meal

- Protein extraction and crude separation experiments

- ion exchange chromatography

- High performance reversed-phase liquid chromatography

- High Performance Resistance Liquid Chromatography

- Solid phase synthesis, cleavage and purification of peptides

- Nuclease protection assay

- vise

- Serum Preparation Experiment

- Serum Protein Agarose Gel Electrophoresis

- Measurement of plasma free hemoglobin